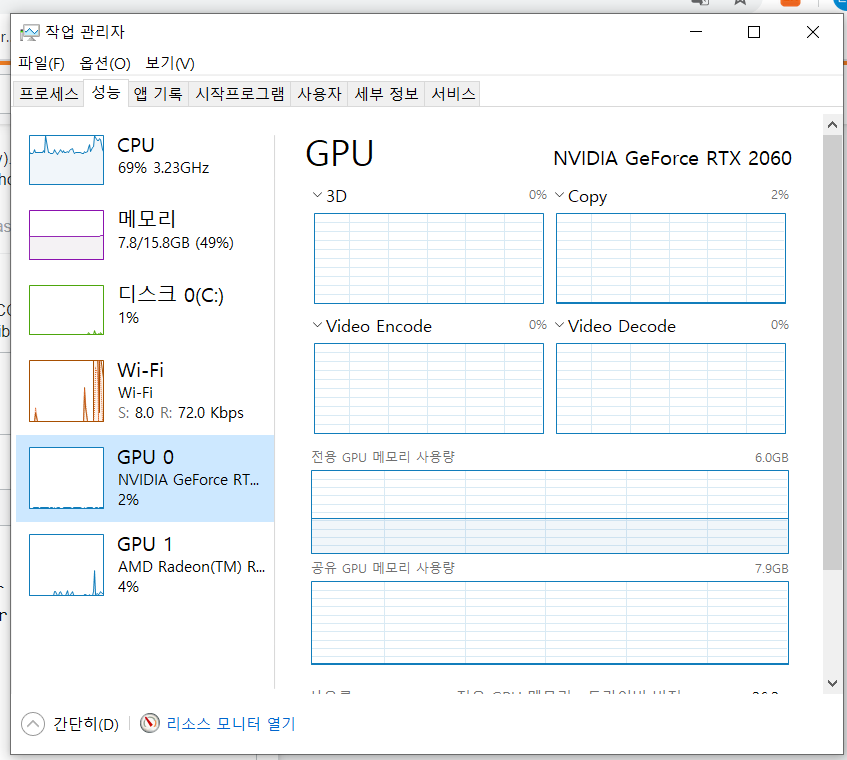

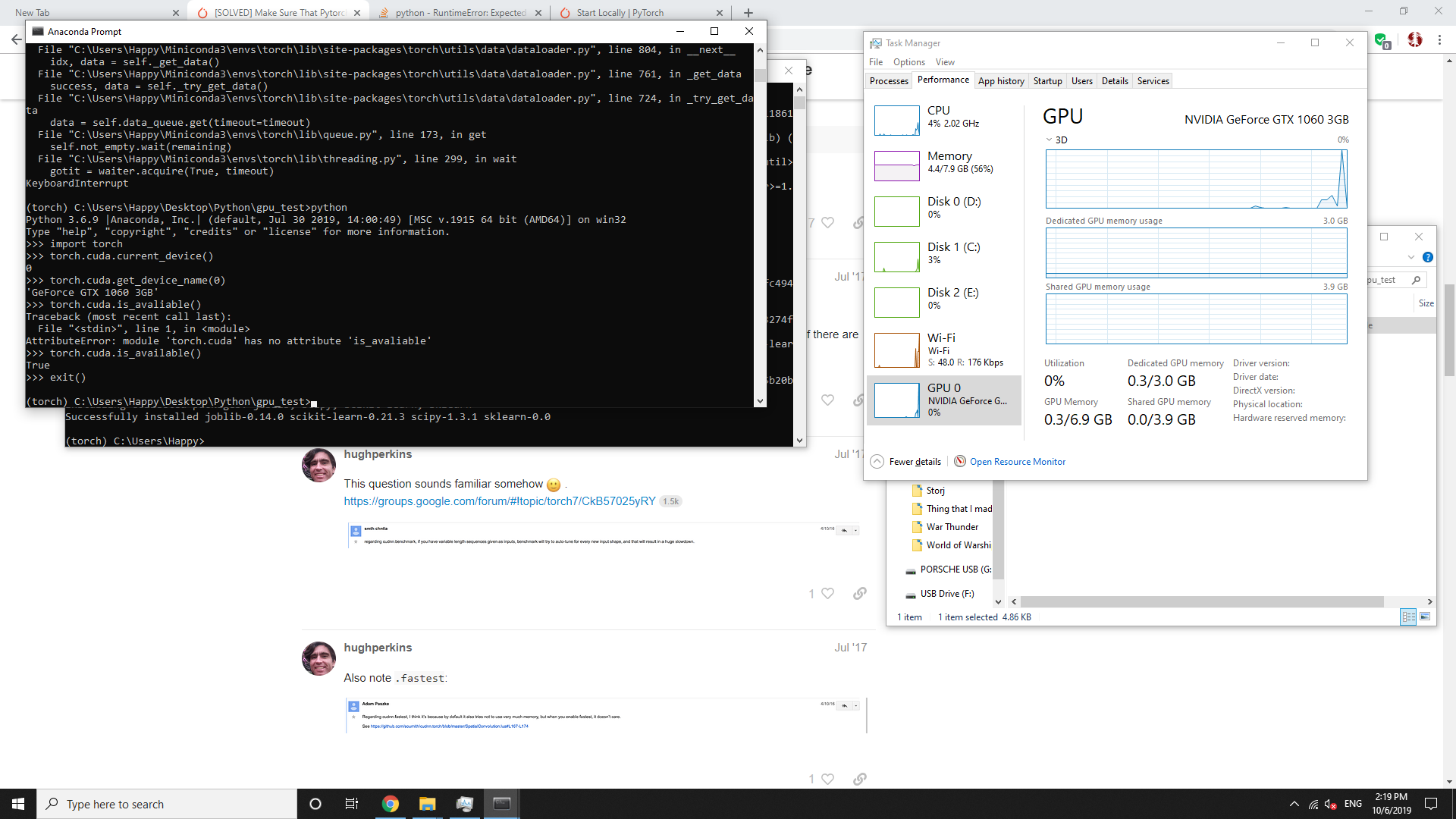

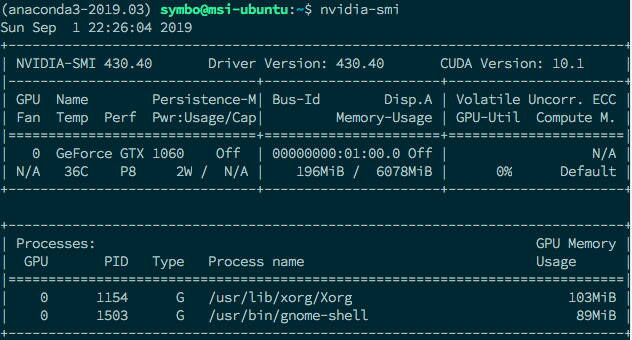

Use GPU in your PyTorch code. Recently I installed my gaming notebook… | by Marvin Wang, Min | AI³ | Theory, Practice, Business | Medium

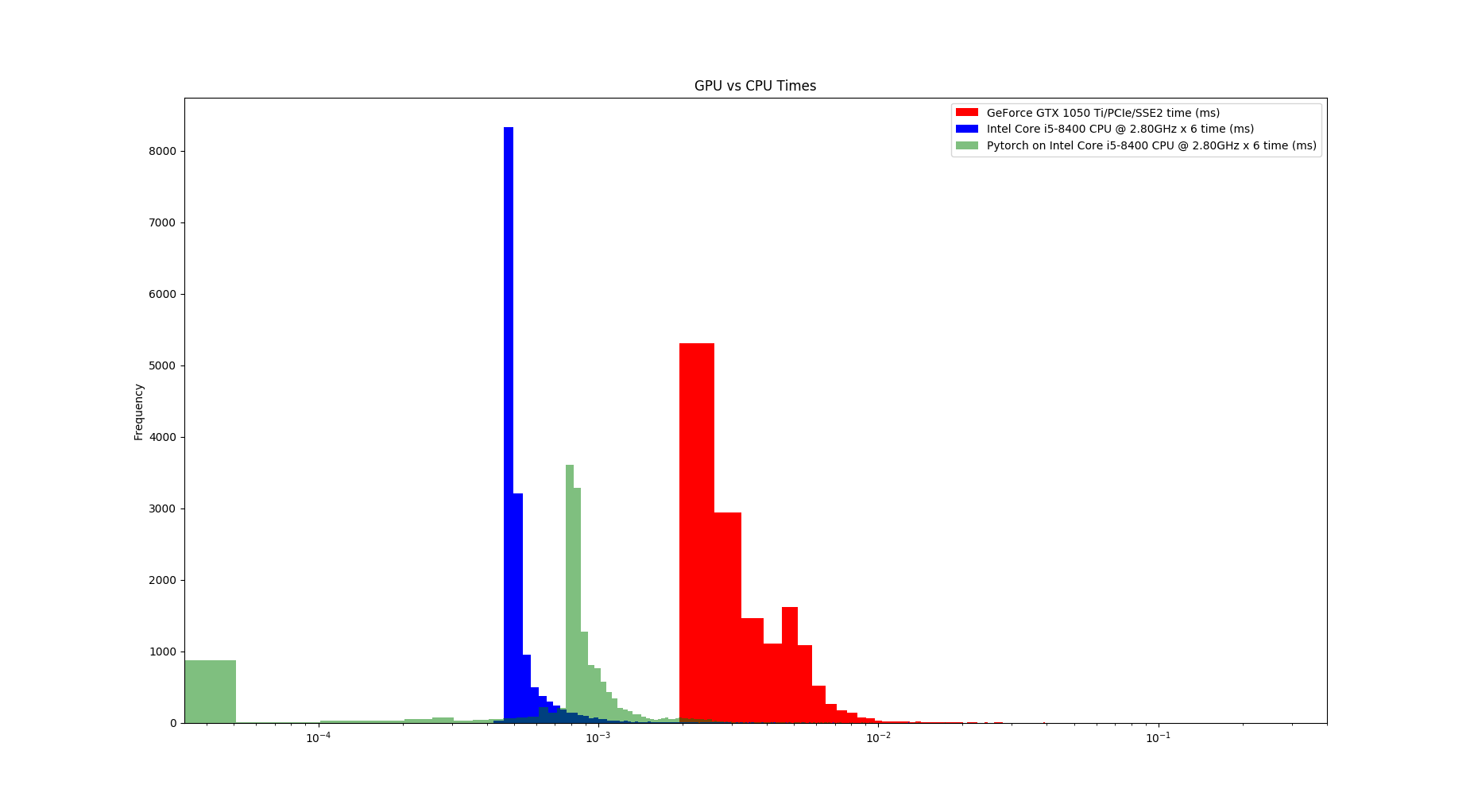

machine learning - How to make custom code in python utilize GPU while using Pytorch tensors and matrice functions - Stack Overflow

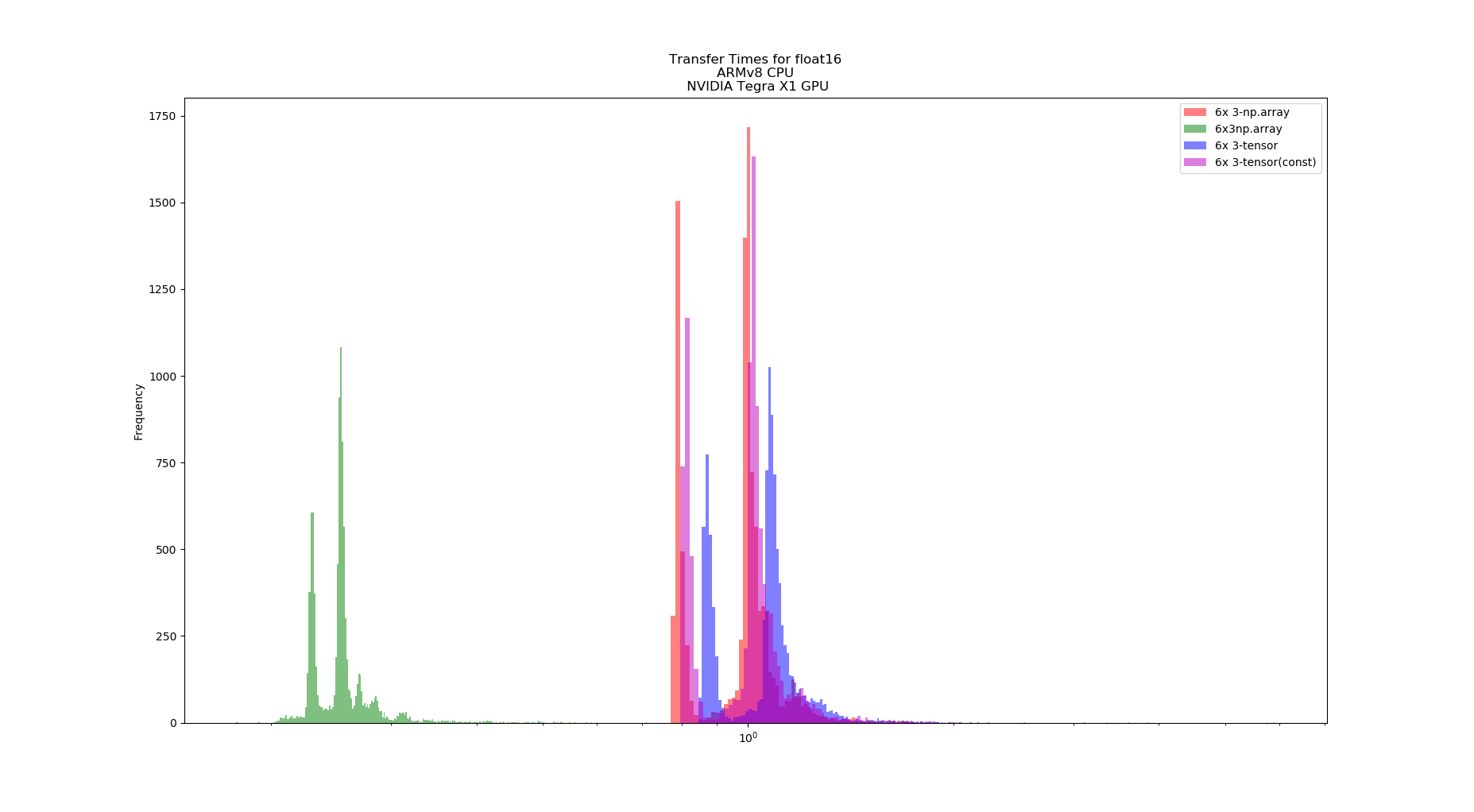

Accelerate computer vision training using GPU preprocessing with NVIDIA DALI on Amazon SageMaker | AWS Machine Learning Blog

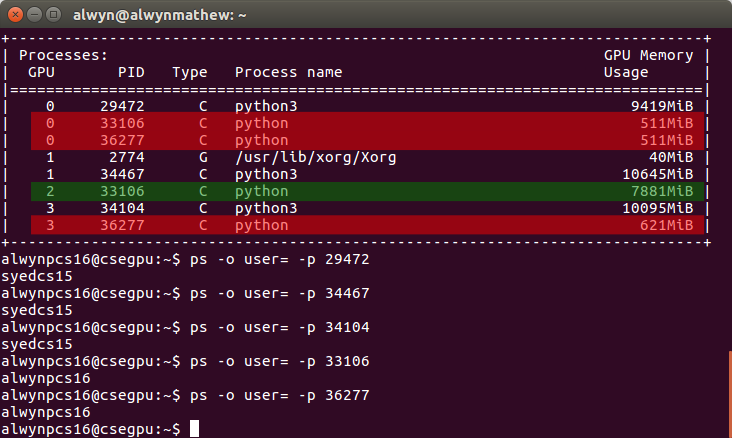

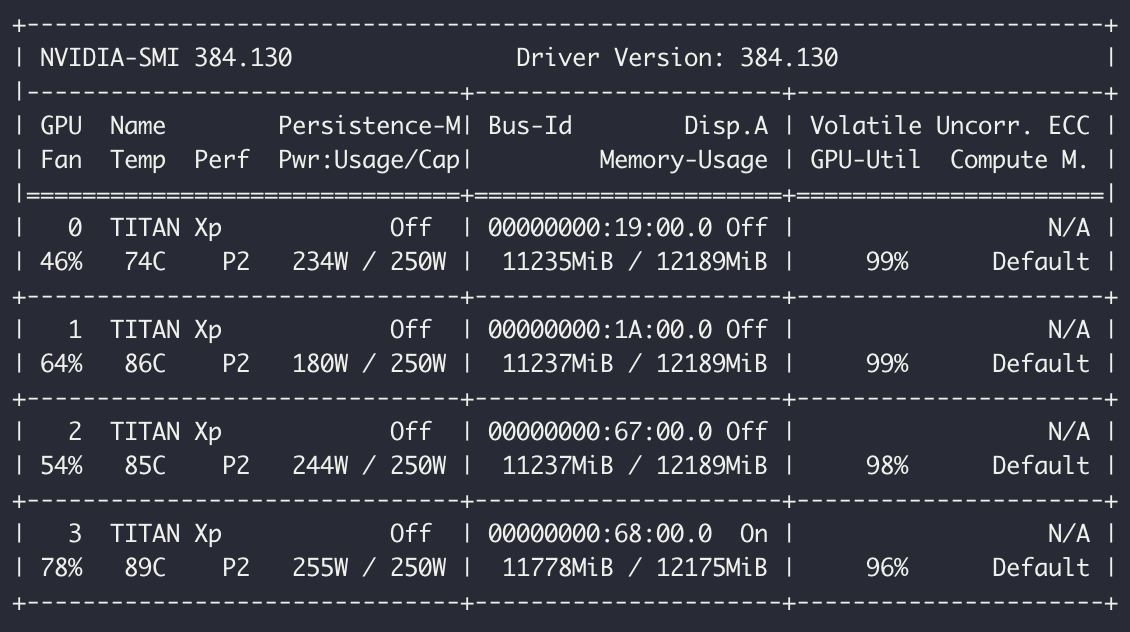

Learn PyTorch Multi-GPU properly. I'm Matthew, a carrot market machine… | by The Black Knight | Medium

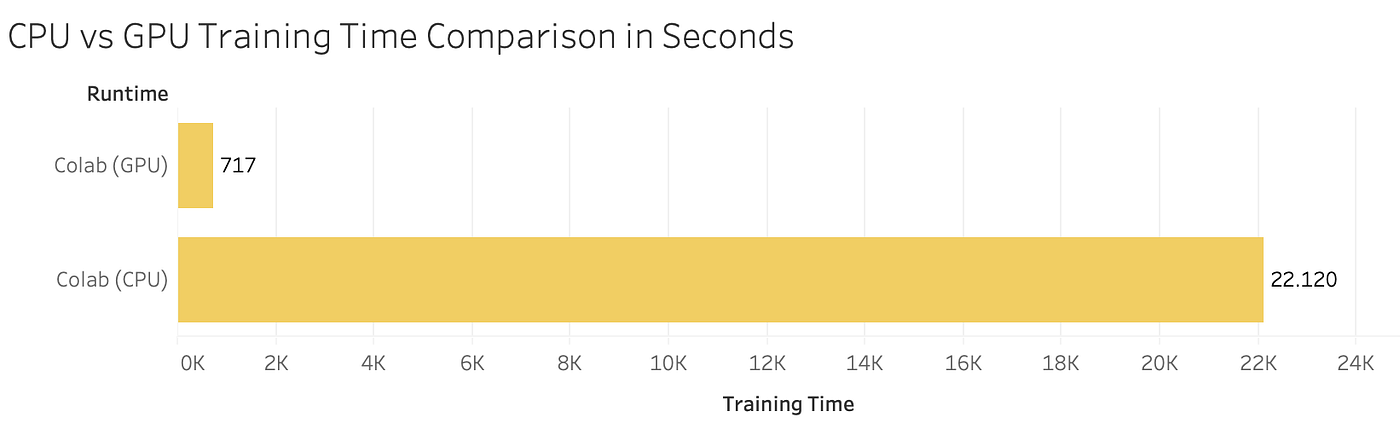

PyTorch: Switching to the GPU. How and Why to train models on the GPU… | by Dario Radečić | Towards Data Science