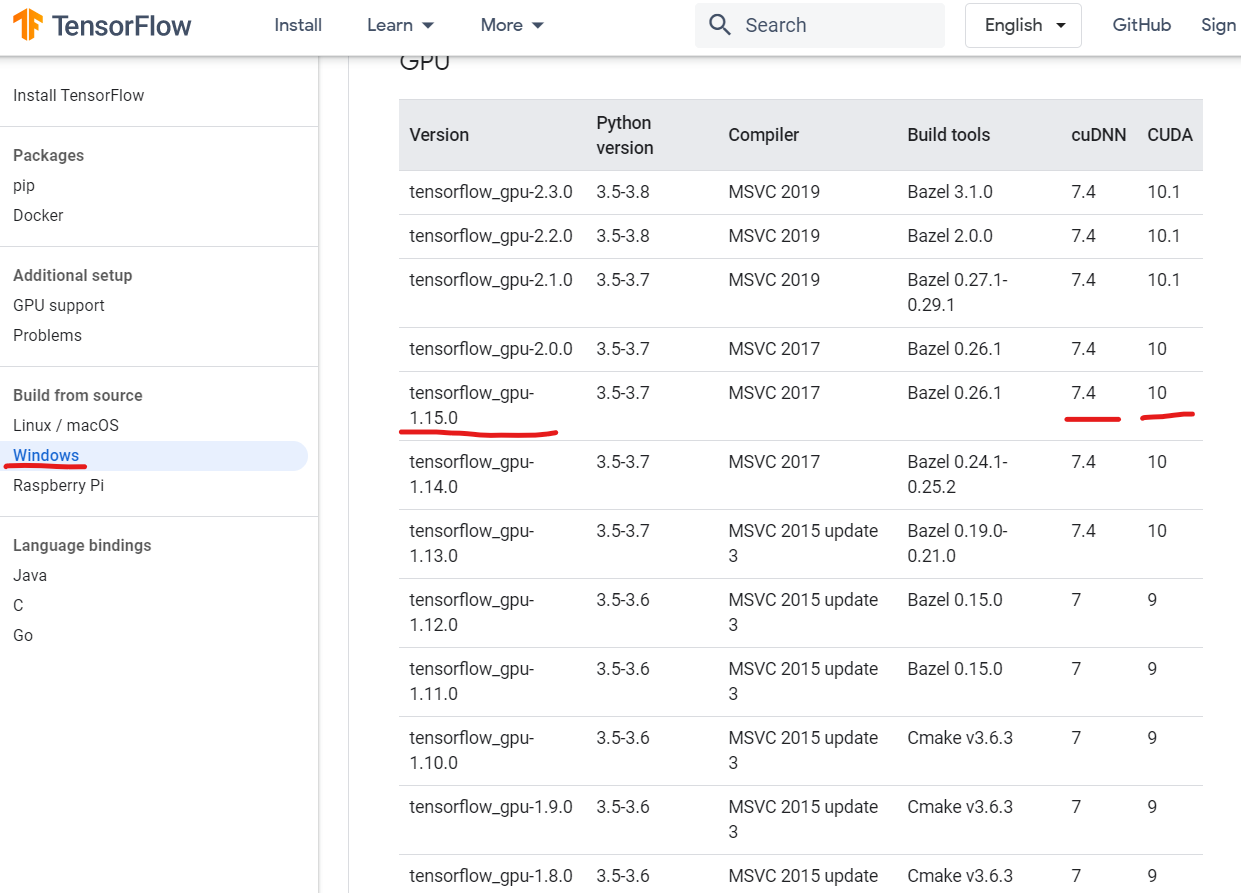

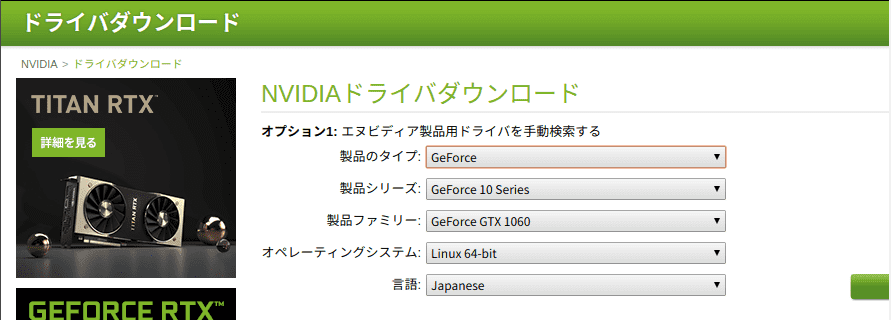

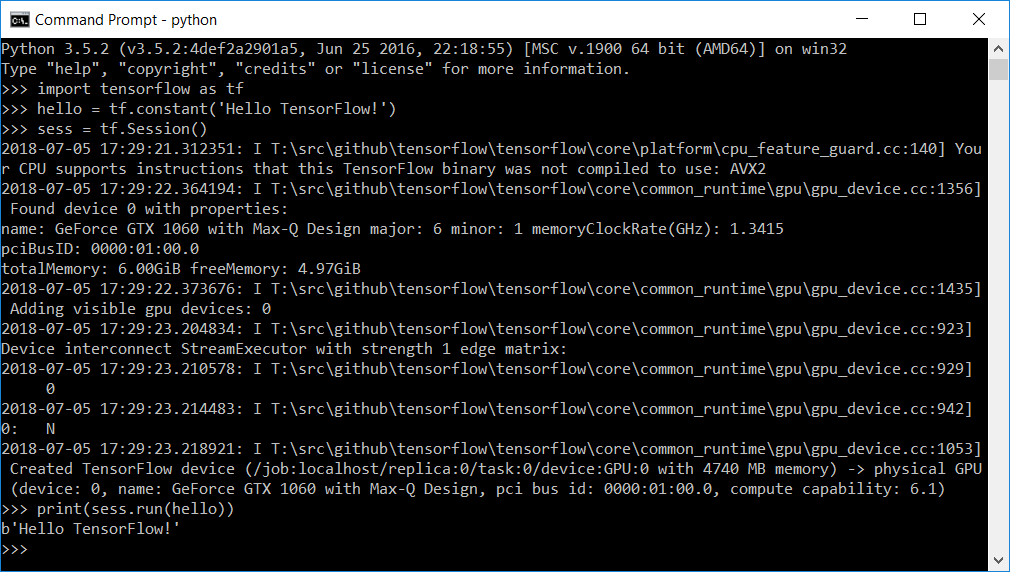

Tensorflow recognized my GPU which is GTX 1060, but is using my CPU to train · Issue #20251 · tensorflow/tensorflow · GitHub

Is it necessary to have NVIDIA graphics to get started with TensorFlow? What can AMD users do? - Quora

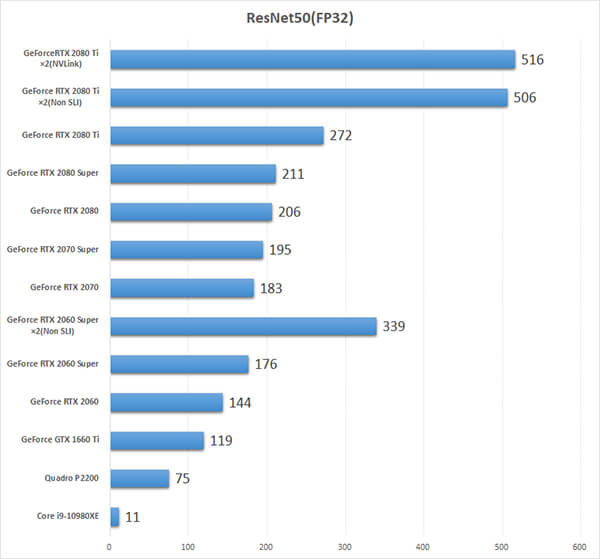

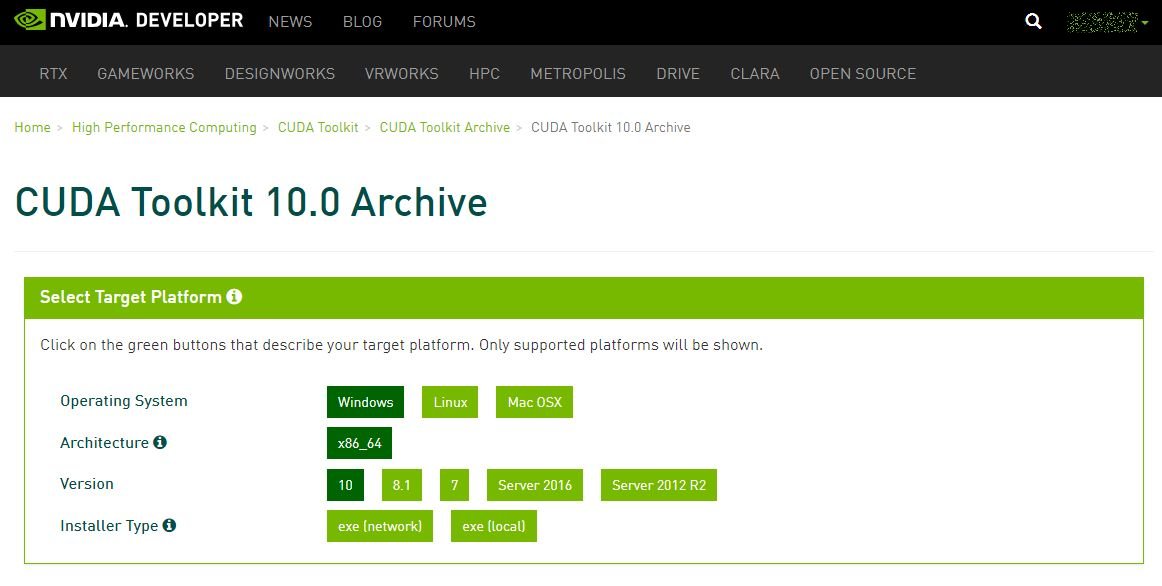

TensorFlow Performance with 1-4 GPUs -- RTX Titan, 2080Ti, 2080, 2070, GTX 1660Ti, 1070, 1080Ti, and Titan V